Article of the week: Moving up a GEAR: evaluating robotic dry lab exercises

Every week the Editor-in-Chief selects the Article of the Week from the current issue of BJUI. The abstract is reproduced below, and the full article is freely available to all readers for at least 30 days from the time of this post.

In addition to the article itself, there is an accompanying editorial written by prominent members of the urological community. This blog is intended to provoke comment and discussion and we invite you to use the comment tools at the bottom of each post to join the conversation.

If you only have time to read one article this week, it should be this one.

Face, content, construct and concurrent validity of dry laboratory exercises for robotic training using a global assessment tool

Patrick Ramos, Jeremy Montez, Adrian Tripp, Casey K. Ng, Inderbir S. Gill and Andrew J. Hung

USC Institute of Urology, Keck School of Medicine, University of Southern California, Los Angeles, CA, USA

OBJECTIVES

• To evaluate robotic dry laboratory (dry lab) exercises in terms of their face, content, construct and concurrent validities.

• To evaluate the applicability of the Global Evaluative Assessment of Robotic Skills (GEARS) tool to assess dry lab performance.

MATERIALS AND METHODS

• Participants were prospectively categorized into two groups: robotic novice (no cases as primary surgeon) and robotic expert (≥30 cases).

• Participants completed three virtual reality (VR) exercises using the da Vinci Skills Simulator (Intuitive Surgical, Sunnyvale, CA, USA), as well as corresponding dry lab versions of each exercise (Mimic Technologies, Seattle, WA, USA) on the da Vinci Surgical System.

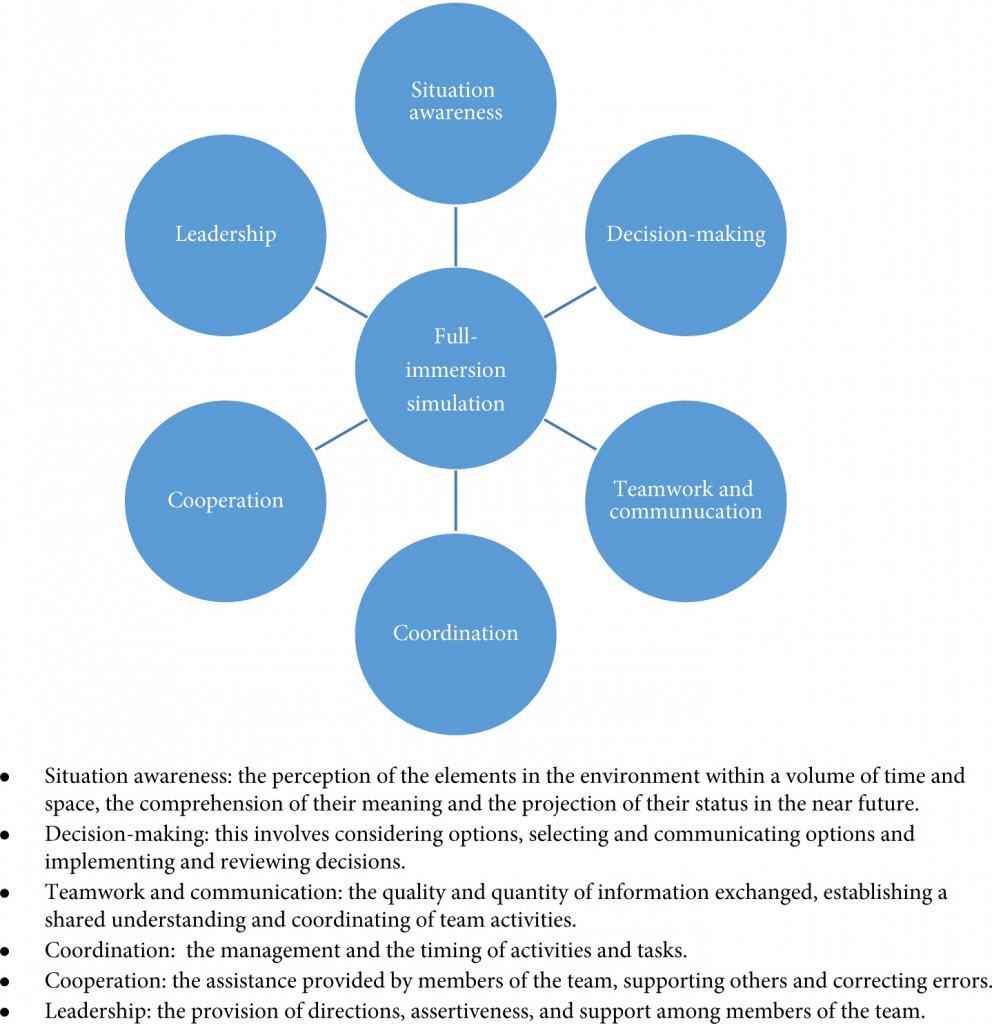

• Simulator performance was assessed using the metrics recorded by the simulator. Dry lab performance was evaluated from video recordings by blinded expert reviewers using the six-metric GEARS tool.

• Participants completed a post-study questionnaire (to evaluate face and content validity).

• A Wilcoxon non-parametric test was used to compare performance between groups (construct validity), and Spearman’s correlation coefficient was used to assess the correlation between simulator and dry lab performance (concurrent validity).
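The two statistical comparisons above can be illustrated with a minimal, dependency-free sketch. The abstract does not name the software used; this sketch assumes that, for two independent groups, the "Wilcoxon" test is the Wilcoxon rank-sum test (equivalent to the Mann–Whitney U test), and it uses a normal approximation without tie correction, so its p values are approximate (exact tests are preferable for very small groups). The function names and all scores below are hypothetical and are not data from the study.

```python
import math
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values 1..n; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # convert 0-based positions to 1-based ranks
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def rank_sum_test(group_a: Sequence[float], group_b: Sequence[float]) -> tuple[float, float]:
    """Wilcoxon rank-sum test (normal approximation, no tie correction).

    Returns (W, two-sided p), where W is the sum of group_a's ranks
    in the pooled sample.
    """
    n1, n2 = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    w = sum(ranks[:n1])
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return w, p


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical GEARS total scores for two small groups (construct validity).
novices = [12, 14, 11, 15, 13]
experts = [25, 27, 24, 28, 26]
w, p = rank_sum_test(novices, experts)

# Hypothetical paired simulator and GEARS scores per participant (concurrent validity).
simulator = [55, 62, 70, 81, 90]
gears_total = [12, 15, 14, 24, 27]
rho = spearman_rho(simulator, gears_total)
```

Ranking before correlating is what makes Spearman's coefficient robust to non-normal score distributions, which is also the motivation for choosing a rank-based test rather than a t-test to compare the groups.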

RESULTS

• Novices had performed a mean of 0 robotic cases; experts had performed a mean (range) of 200 (30–2000) cases.

• Expert surgeons found the dry lab exercises both ‘realistic’ (median [range] score 8 [4–10] out of 10) and ‘very useful’ for training of residents (median [range] score 9 [5–10] out of 10).

• Overall, expert surgeons completed all dry lab tasks more efficiently (P < 0.001) and effectively (GEARS total score P < 0.001) than novices. In addition, experts outperformed novices in each individual GEARS metric (P < 0.001).

• Finally, in comparing dry lab with simulator performance, there was a moderate correlation overall (r = 0.54, P < 0.001). Most simulator metrics correlated moderately to strongly with corresponding GEARS metrics (r = 0.54, P < 0.001).

CONCLUSIONS

• The robotic dry lab exercises in the present study have face, content and construct validity, as well as concurrent validity with the corresponding VR tasks.

• Until now, the assessment of dry lab exercises has been limited to basic metrics (i.e. time to completion and error avoidance). For the first time, we have shown that it is feasible to apply a global assessment tool (GEARS) to dry lab training.